Introduction to Artificial Intelligence

This article answers the question: what is Artificial Intelligence? To explain it, we will go through the history of AI, how it works, the different types of AI, its applications, and future developments.

The idea of artificial intelligence has been with us for centuries, long before computer science existed. The Greek myths of antiquity describe the mechanical servants that the god Hephaestus built for himself, and in the 19th century Mary Shelley's Frankenstein told the story of a human-made creature assembled from parts of dead bodies. In 1927, Fritz Lang directed the movie Metropolis, in which machines were taking over society, and in 1950 Isaac Asimov described robots in his book "I, Robot."

There has always been something fascinating about creating an entity that functions like human intelligence, only better. With today's technological advancements, it no longer seems far-fetched to create an entity that can at least mimic some functions of a human being.

Artificial Intelligence (AI) Explained

Artificial intelligence is the science of making computers perform tasks that normally require human intelligence. AI can be used to solve problems that humans solve using their own intelligence. There are different approaches to AI, such as machine learning (the most widely used) and deep learning, which is itself a type of machine learning.

A brief history of Artificial Intelligence

The term artificial intelligence was coined by John McCarthy in the 1955 proposal for the Dartmouth Conference, which took place in the summer of 1956. There, many AI pioneers gathered for the first time to discuss their plans and ideas about advancing this relatively new field. A few years earlier, Alan Turing had laid much of the theoretical groundwork for modern AI in his paper "Computing Machinery and Intelligence" (1950).

The first machines that could be called AI were created in the early 1950s when scientists began to design programs that could learn and solve problems independently. These so-called "machine learning" algorithms could analyze data and recognize patterns, then be used to make predictions or decisions. In the 1960s, early pioneers in AI started to create computers that could reason, understand natural language and even carry out simple conversations.

In the late 1980s and 1990s, a new wave of AI technologies emerged, including neural networks, which are systems that simulate the workings of the brain.

- (1943) McCulloch and Pitts publish "A Logical Calculus of the Ideas Immanent in Nervous Activity." The article introduced the first mathematical model for constructing a neural network.

- (1949) Donald Hebb, in his book The Organization of Behavior: A Neuropsychological Theory, proposed that neural pathways are formed from experiences. The more frequently neurons are utilized, the stronger their connections become. Hebbian learning is still a critical paradigm in AI.

- (1950) Alan Turing publishes "Computing Machinery and Intelligence," which provides the basis for modern artificial intelligence research. In it he proposes the Turing Test, a method for determining whether a computer is intelligent.

- (1950) Marvin Minsky and Dean Edmonds develop the first neural network computer, SNARC, at Harvard University.

- (1950) Claude Shannon publishes the paper "Programming a Computer for Playing Chess."

- (1950) Isaac Asimov publishes "I, Robot," the story collection built around his "Three Laws of Robotics," which he had first stated in the 1942 story "Runaround."

- (1952) Arthur Samuel creates a program for playing checkers.

- (1954) The Georgetown-IBM machine translation project automatically translates 60 carefully selected Russian phrases into English.

- (1956) The Dartmouth Summer Research Project on Artificial Intelligence, for which John McCarthy coined the term artificial intelligence, takes place. The conference, which set the parameters and objectives of AI, is widely regarded as the birth of the field.

- (1956) Allen Newell and Herbert Simon demonstrate Logic Theorist (LT), the first reasoning program.

- (1958) John McCarthy develops Lisp, an AI programming language, and publishes the essay "Programs with Common Sense." The paper imagines a hypothetical Advice Taker, a fully functional AI system capable of learning from experience in the same way that people can.

- (1959) The General Problem Solver (GPS) is a program developed by Allen Newell, Herbert Simon, and J.C. Shaw to mimic human problem-solving.

- (1959) Herbert Gelernter creates the Geometry Theorem Prover program.

- (1959) At IBM, Arthur Samuel coins the term machine learning.

- (1959) The MIT Artificial Intelligence Project was founded by John McCarthy and Marvin Minsky.

- (1963) The AI Lab at Stanford was founded by John McCarthy.

- (1966) The U.S. government's Automatic Language Processing Advisory Committee (ALPAC) report documents the lack of advancement in machine translation research, a critical Cold War project promising automatic and immediate translation from Russian. All government-funded MT projects are canceled as a result of the ALPAC report.

- (1969) The first successful expert systems, DENDRAL, a XX program, and MYCIN, designed to diagnose blood infections, are created at Stanford.

- (1972) PROLOG was created as a logic programming language.

- (1973) The British government releases the "Lighthill Report," which documents AI disappointments, leading to severe budget cuts for AI research.

- (1974-1980) Frustration with AI's development causes significant DARPA cuts in academic research grants. Coupled with the earlier ALPAC report and the 1973 "Lighthill Report," the cuts cause artificial intelligence funding to dry up and research to stall. This period is known as the "First AI Winter."

- (1980) Digital Equipment Corporation creates R1 (also known as XCON), the first successful commercial expert system. Designed to configure orders for new computer systems, R1 kicks off an investment boom in expert systems that lasts through much of the 1980s, effectively ending the first "AI Winter."

- (1982) The Japanese Ministry of International Trade and Industry launches the ambitious Fifth Generation Computer Systems (FGCS) project. The objective of FGCS is to deliver supercomputer-like performance and a platform for AI research.

- (1983) In response to Japan's FGCS, the United States government launches the Strategic Computing Initiative (SCI) to fund cutting-edge computing research through DARPA.

- (1985) As companies invest in expert systems, an entire industry known as the Lisp machine market develops to support them. Companies such as Symbolics and Lisp Machines Inc. build specialized computers to run the AI programming language Lisp.

- (1987-1993) The Lisp machine market collapsed in 1987, ushering in the "Second AI Winter" as computing technology improved and cheaper alternatives appeared. Expert systems proved too expensive to maintain and upgrade, eventually becoming obsolete.

- (1991) The United States military deployed DART, an automated logistics planning and scheduling software, during the Gulf War.

- (1992) Japan terminates the FGCS project, citing its failure to achieve its ambitious objectives.

- (1993) DARPA shuts down the Strategic Computing Initiative after spending almost $1 billion and failing to meet many of its goals.

- (1997) IBM's Deep Blue wins against world chess champion Garry Kasparov.

- (2005) Stanley, a self-driving car from Stanford University, wins the DARPA Grand Challenge.

- (2005) The U.S. military begins funding research and development in autonomous robots like Boston Dynamics' "BigDog" and iRobot's "PackBot."

- (2008) Google makes significant advances in speech recognition and introduces the feature in its iPhone app.

- (2011) On "Jeopardy!," IBM's Watson beats the competition.

- (2011) Apple releases Siri, an artificial intelligence-based virtual assistant for its iOS operating system.

- (2012) Andrew Ng, founder of the Google Brain Deep Learning project, feeds a neural network 10 million YouTube videos as a training set. The network learns to recognize a cat without being told what a cat is, ushering in the breakthrough era for neural networks and deep learning funding.

- (2014) Google's self-driving car becomes the first to pass a state driving exam.

- (2014) Amazon releases Alexa, a virtual personal assistant.

- (2016) AlphaGo, the computer program developed by Google's DeepMind, defeats the world Go champion, Lee Sedol. The complexity of the ancient Chinese game had been seen as a major hurdle for AI to clear.

- (2016) Sophia, the world's first "humanoid robot citizen," was developed by Hanson Robotics and has face detection, verbal communication, and facial expression abilities.

- (2018) Google releases BERT, a natural language processing engine that lowers the barriers machine learning applications face in translation and comprehension.

- (2018) Waymo announces the debut of its Waymo One service, which allows customers in the Phoenix metropolitan area to hail a self-driving car from anywhere.

- (2020) During the early phases of the SARS-CoV-2 pandemic, Baidu releases its LinearFold AI algorithm to research and medical teams working on a vaccine. The algorithm can predict the secondary structure of the virus's RNA sequence in just 27 seconds, 120 times faster than previous approaches.

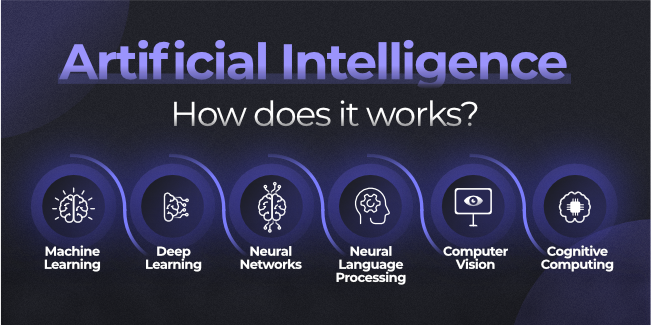

How does Artificial Intelligence work?

It takes time and effort to understand what an artificial intelligence system is made up of, how it functions, and how it achieves its goals. The goal of an AI system is to mimic human behavior. Achieving this requires reverse-engineering human traits and abilities into a machine and then using the machine's computing power to go beyond what we can do ourselves.

To fully comprehend how artificial intelligence works, you must first learn about the many subcategories of Artificial Intelligence and how they may be applied to various industries.

Machine Learning

ML teaches a machine to draw conclusions and judgments based on previous experience. It discovers trends, analyzes historical data to determine the significance of these data points, and reaches a possible conclusion without requiring human input. This automation in reaching conclusions by evaluating data saves businesses time and aids them in making better decisions.
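
To make the idea of "drawing conclusions from previous experience" concrete, here is a minimal sketch of one of the simplest machine learning techniques, fitting a straight line to historical data with ordinary least squares. The ad-spend and sales figures are hypothetical and only serve as an illustration; real systems use far more data and more capable models.

```python
def fit_line(xs, ys):
    """Learn slope and intercept of y = slope * x + intercept by least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned line to a new, unseen input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical history: monthly ad spend (in $1000s) and units sold.
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
units_sold = [12.0, 14.0, 16.0, 18.0, 20.0]

model = fit_line(ad_spend, units_sold)   # "training" on past experience
forecast = predict(model, 6.0)           # conclusion for a new situation
print(f"Expected units sold at $6k ad spend: {forecast:.1f}")
```

Nobody tells the program the relationship between spend and sales. It derives the trend from the historical data points and then applies it to a case it has not seen.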

Deep Learning

The term "deep learning" refers to a type of machine learning. It teaches a machine to process data through several layers to classify, guess, and predict the result.

Neural Networks

Neural networks are algorithms loosely modeled on the way the human brain functions. They capture the relationships between numerous underlying variables and process the information through layers of connected nodes, in a way loosely comparable to how neurons in a person's brain work together.
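
As a rough illustration of data flowing through several layers, the sketch below runs a forward pass through a tiny two-layer network that computes XOR (the output is 1 only when exactly one input is 1). The weights are picked by hand for this example, which is an assumption made to keep it short. A real neural network would learn its weights from training data.

```python
def relu(values):
    """Activation function: pass positive values through, clamp negatives to 0."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum of the inputs per neuron, plus a bias."""
    return [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        for neuron_weights, bias in zip(weights, biases)
    ]


# Hand-picked weights (not learned) that make the network compute XOR.
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[1.0, -2.0]]
OUTPUT_BIASES = [0.0]


def forward(inputs):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = relu(dense(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES))
    return dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]


for pair in ([0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]):
    print(pair, "->", forward(pair))
```

A single layer cannot represent XOR, so even this toy case shows why stacking layers matters: the hidden layer transforms the inputs into a form the output layer can separate.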

Natural Language Processing

Natural language processing (NLP) is the science of getting a machine to read, comprehend, and interpret human language. Once a computer understands what the user intends to communicate, it can respond appropriately.
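
The sketch below is a deliberately simple stand-in for that understand-then-respond loop: it splits a sentence into words, guesses the user's intent from keyword overlap, and picks a matching reply. The intents, keywords, and replies are all hypothetical, and modern NLP systems rely on learned language models rather than keyword lists.

```python
import re

# Hypothetical intents and the keywords that hint at them.
INTENT_KEYWORDS = {
    "weather": {"weather", "rain", "forecast", "temperature"},
    "greeting": {"hello", "hi", "hey"},
}

# Hypothetical replies, one per intent.
RESPONSES = {
    "weather": "Let me look up the forecast for you.",
    "greeting": "Hello! How can I help?",
    "unknown": "Sorry, I did not understand that.",
}


def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def detect_intent(text):
    """Return the intent whose keywords overlap most with the words in the text."""
    tokens = tokenize(text)
    best_intent, best_overlap = "unknown", 0
    for intent, keywords in INTENT_KEYWORDS.items():
        overlap = len(tokens & keywords)
        if overlap > best_overlap:
            best_intent, best_overlap = intent, overlap
    return best_intent


def respond(text):
    """Map the detected intent to a canned reply."""
    return RESPONSES[detect_intent(text)]


print(respond("Will it rain tomorrow?"))
print(respond("Play some jazz"))
```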

Computer Vision

A computer vision algorithm is a program that analyzes an image and breaks it down into smaller pieces to understand it. It aids the machine in classifying and learning from a set of pictures to produce a better output decision based on prior experiences.
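
Breaking an image into smaller pieces can be sketched in a few lines. The example below treats a grayscale image as a grid of brightness values, splits it into tiles, and measures each tile to find the brightest region. The 4×4 image and the tile size are made up for illustration. Real computer vision models learn which features to extract instead of using a fixed measure like average brightness.

```python
def split_into_tiles(image, size):
    """Cut a 2D grid of pixel values into size x size tiles, keyed by top-left corner."""
    tiles = {}
    for top in range(0, len(image), size):
        for left in range(0, len(image[0]), size):
            tiles[(top, left)] = [row[left:left + size] for row in image[top:top + size]]
    return tiles


def mean_brightness(tile):
    """Average pixel value of one tile."""
    pixels = [pixel for row in tile for pixel in row]
    return sum(pixels) / len(pixels)


# Hypothetical 4x4 grayscale image: a bright patch in the top-right corner.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]

tiles = split_into_tiles(image, 2)
brightest = max(tiles, key=lambda position: mean_brightness(tiles[position]))
print("Brightest tile starts at (row, column):", brightest)
```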

Cognitive Computing

Cognitive computing algorithms attempt to mimic a human brain by analyzing text/speech/images/objects in such a way as to produce the intended conclusion.

What are the Types of Artificial Intelligence?

3 Types of Artificial Intelligence

- Artificial Narrow Intelligence (ANI)

- Artificial General Intelligence (AGI)

- Artificial Super Intelligence (ASI)

What is Artificial Narrow Intelligence (ANI)?

ANI is the most common kind of AI and the only form that exists today. ANI systems are designed to deal with a single problem and perform one specific activity well. Their capabilities are limited to tasks such as suggesting a product to an e-commerce customer or forecasting the weather. They can approximate human performance in some situations and even exceed it in others, but they only succeed in tightly controlled environments with a restricted range of variables.

What is Artificial General Intelligence (AGI)?

Although AGI is still a theoretical idea, it has been researched for some time. It is defined as AI with human-level cognitive function across many domains, such as language processing, image processing, computational function, and reasoning, to mention a few.

We're still a long way from creating an AGI system. An AGI system would likely need to be made up of thousands of Artificial Narrow Intelligence systems that collaborate to mimic human reasoning. Even one of the most advanced supercomputers, Fujitsu's K, needed 40 minutes to simulate a single second of neuronal activity. This reflects both the extreme complexity and interconnectedness of the human brain and our inability to create an AGI with our current resources.

What is Artificial Super Intelligence (ASI)?

The idea of artificial superintelligence has been around for a long time. It remains hypothetical, but people are starting to take it seriously. An Artificial Super Intelligence (ASI) system would be able to surpass all human capabilities, including decision-making, making sensible judgments, creating more beautiful art, and building emotional relationships.

Once we achieve Artificial General Intelligence, AI systems would be able to enhance their capabilities rapidly and advance into areas that we may never have imagined. The gap between AGI and ASI would be relatively small (some claim it could be as little as a nanosecond, because that is how fast an artificial intelligence would learn), but there is still a long road ahead of us toward AGI.

What is the Purpose of Artificial Intelligence?

From a technological standpoint, Artificial Intelligence aims to assist humans in performing more sophisticated calculations and making important decisions. From a philosophical standpoint, it has the potential to let people live more prosperous, more purposeful lives without having to work as hard.

Artificial Intelligence is often described as humanity's final invention: a technology that would create ground-breaking tools and services and dramatically improve how we lead our lives, hopefully by eliminating conflict, inequality, and human suffering.

All of this is still some time in the future, and we're a long way from achieving such results. At present, businesses use Artificial Intelligence to improve process efficiency, automate resource-heavy activities, and make business predictions based on hard data rather than intuition. Like earlier technologies, which needed corporate and government funding to develop, AI will require investment and research efforts from businesses and governments before it becomes widely available to the general public.

Where is Artificial Intelligence (AI) Used?

AI technology is used in various industries to provide insights into user behavior and offer suggestions based on the data. For example, Google's predictive search algorithm uses past user data to anticipate what a user will type next in the search bar. Netflix uses past viewing data to recommend what a person might want to watch next, keeping viewers on the platform and boosting watch time. Facebook (Meta) has used facial data from users' photos to suggest tags automatically. Large organizations use AI throughout their products to make an end user's life simpler. Artificial Intelligence technologies are typically used to support the following data activities:

- Searching within data and optimizing the search to provide the most relevant results.

- Building logic chains for if-then reasoning that can be used to execute a sequence of commands based on variables.

- Detecting patterns, using machine learning to find significant patterns in large data sets and produce new insights.

- Applying probabilistic models to forecast future events.
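
The if-then logic chains from the list above can be sketched as a small forward-chaining rule engine: each rule says "if these facts hold, then conclude this new fact," and the engine keeps applying rules until nothing new can be derived. The shipping rules and fact names are hypothetical and chosen only to show one conclusion feeding into the next rule.

```python
# Each rule: (set of conditions that must all hold, conclusion to add).
# Rule contents are hypothetical examples.
RULES = [
    ({"order_total_over_100"}, "free_shipping"),
    ({"free_shipping", "is_member"}, "priority_dispatch"),
]


def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new facts can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived


result = forward_chain({"order_total_over_100", "is_member"}, RULES)
print(sorted(result))
```

Here the second rule only fires because the first rule has already added `free_shipping`, which is what makes this a chain and not a flat list of checks.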

What are the Advantages of Artificial Intelligence?

Unquestionably, technology has improved our lives. From music suggestions and map directions to mobile banking and fraud prevention, AI and related technologies have taken the reins. Still, every technology is a two-sided coin, and the line between progress and harm can be thin. Let us examine some of the advantages of AI.

Advantages of Artificial Intelligence (AI)

- Reduction in human error

- Available 24×7

- Helps in repetitive work

- Digital assistance

- Faster decisions

- Rational decision-making

- Medical applications

- Improved security

- Efficient communication

AI in Everyday Life

Online shopping

In online shopping, artificial intelligence gives customers customized suggestions based on their previous searches and purchases.
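
One simple way to turn past purchases into suggestions is to count which items were bought together with what is already in the customer's basket. The sketch below does exactly that, using a made-up order history. It is an illustrative assumption, not how any particular store works, and production recommenders use much richer signals.

```python
from collections import Counter


def recommend(purchase_history, basket, top_n=2):
    """Suggest items that most often appear in past orders alongside the basket items."""
    scores = Counter()
    for past_order in purchase_history:
        if basket & past_order:                 # order shares an item with the basket
            for item in past_order - basket:    # count only items not already chosen
                scores[item] += 1
    return [item for item, _ in scores.most_common(top_n)]


# Hypothetical order history: each set is one past order.
history = [
    {"laptop", "mouse"},
    {"laptop", "mouse", "bag"},
    {"phone", "case"},
    {"laptop", "bag", "mouse"},
]

suggestions = recommend(history, {"laptop"})
print("Customers who bought a laptop also bought:", suggestions)
```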

Digital personal assistants

Smartphones employ artificial intelligence to give personalized services. AI assistants may answer inquiries and assist customers in organizing their daily routines without difficulty.

Machine translations

AI-based language translation software may assist people in understanding different languages.

Cybersecurity

AI systems may be used to identify and combat cyberattacks by detecting patterns and tracing attacks back to their source.

Artificial Intelligence in Healthcare

AI has been used to detect, evaluate, and track the spread of diseases such as COVID-19.

Applications of Artificial Intelligence in Business

AI has the potential to revolutionize many industries, with a wide range of possible applications. What all these sectors and use cases have in common is that they are data-driven. Because Artificial Intelligence is essentially a data processing machine, there's a lot of potential for optimization across many industries.

- Administration in Healthcare

- Telemedicine

- Assisted Diagnosis

- Robot-assisted surgery

- Vital Stats Monitoring

- E-commerce: better recommendations

- Chatbots

- Filtering spam and fake reviews

- Optimizing search

- Supply-chain

Future of Artificial Intelligence

Humans have always been interested in technological innovation and fiction, and we are now living in one of the most revolutionary periods in our history. Artificial Intelligence has emerged as the next major development in technology. Across the world, organizations are developing ground-breaking artificial intelligence and machine learning technologies. Artificial intelligence is not only affecting the future of every industry and individual, it is also driving other technologies such as big data, robotics, and the Internet of Things. Given its rapid expansion, it will continue to drive technological innovation for years to come. As a result, there are many appealing career options for educated and certified specialists. These technologies will have a bigger influence on society and quality of life as time goes on. Last but not least, researchers at the University of Oxford have created an AI that debates its own ethics.