Things You Need To Know About Deep Learning

Deep learning is a subfield of machine learning. It involves building neural networks, which have driven major improvements in speech recognition and natural language processing and are also applied in bioinformatics and drug design. Deep learning is gaining a lot of attention these days, and for good reason: it is achieving results that were previously thought impossible, so it is worth considering for your app idea. At its core, the technique teaches computers to learn by example.

In deep learning, a computer model learns to perform classification tasks directly from images, text, or sound. Deep learning models can achieve state-of-the-art accuracy, sometimes exceeding human-level performance. Models are trained using large sets of labeled data and neural network architectures that contain many layers.

How does Deep Learning Work?

Training a deep learning model can be compared to the way a toddler learns to recognize a cat. The term "deep" refers to the number of processing layers through which data must pass. Each layer in the hierarchy applies a nonlinear transformation to its input and uses what it learns to build a statistical model as output. Iterations continue until the output reaches an acceptable level of accuracy.

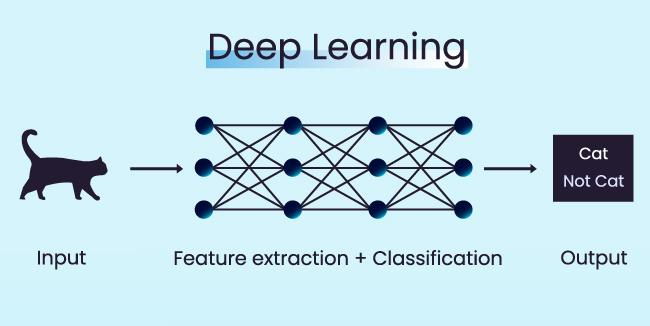

In conventional machine learning, the programmer must be meticulous in telling the computer what kinds of things to look for when deciding whether an image contains a cat. This process, called feature extraction, is time-consuming, and the computer's success rate depends entirely on the programmer's ability to define a feature set for "cat" precisely. The benefit of deep learning is that the program builds the feature set by itself, without a person having to specify the features. Learning features automatically is usually faster than hand-engineering them and, given enough data, often more accurate.

A deep learning program begins with training data: for example, a set of images in which a person has labeled each image "cat" or "not a cat" using metatags. The program uses the information in the training data to build a feature set for cats and a predictive model. With each iteration, the predictive model becomes more complex and more accurate. The program does not know what a cat is; it simply looks for patterns in the digital data.
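The iterative cycle described above can be sketched in plain Python. This is a minimal sketch under loud assumptions: each "image" is reduced to two hypothetical numbers (a real deep learning model would learn from raw pixels through many layers), the model is a single logistic neuron, and it is trained by gradient descent on the average cross-entropy loss. The only point is to show the prediction error shrinking with each pass over the labeled data.

```python
import math

# Hypothetical toy data: each "image" is reduced to two made-up feature values.
# Label 1 = "cat", 0 = "not a cat".
DATA = [
    ((0.9, 0.8), 1), ((0.8, 0.9), 1), ((0.7, 0.9), 1),
    ((0.1, 0.2), 0), ((0.2, 0.1), 0), ((0.3, 0.2), 0),
]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability that x is a cat, from a weighted sum plus bias."""
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)


def log_loss(w, b, data):
    """Average cross-entropy between predicted probabilities and labels."""
    total = 0.0
    for x, y in data:
        p = predict(w, b, x)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def train(data, epochs=200, lr=1.0):
    """Full-batch gradient descent; returns the weights and the loss after each pass."""
    w, b = [0.0, 0.0], 0.0
    history = []
    for _ in range(epochs):
        gw, gb = [0.0, 0.0], 0.0
        for x, y in data:
            err = predict(w, b, x) - y  # gradient of the loss w.r.t. the weighted sum
            gw[0] += err * x[0]
            gw[1] += err * x[1]
            gb += err
        n = len(data)
        w = [w[0] - lr * gw[0] / n, w[1] - lr * gw[1] / n]
        b -= lr * gb / n
        history.append(log_loss(w, b, data))
    return w, b, history


if __name__ == "__main__":
    w, b, history = train(DATA)
    print(f"loss after first pass: {history[0]:.3f}, after last: {history[-1]:.3f}")
```

Each pass over the data nudges the weights in the direction that reduces the error, which is the "more accurate with each iteration" behavior in miniature.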

Deep neural networks are made up of many interconnected nodes, loosely inspired by neurons in the human brain. Between the input layer and the output layer sit one or more hidden layers, whose nodes are called hidden units; the more layers a network has, the deeper it is. Signals travel between nodes along connections that each carry a weight, and a connection with a larger weight has a stronger influence on the next node. Each node sums its weighted inputs and applies an activation function, and the final (output) layer combines these signals to generate an output. Because deep learning algorithms crunch large amounts of data and require many complex mathematical operations, they need powerful hardware, and training a complex model can still take weeks.
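To make the flow of weighted signals concrete, here is a minimal forward-pass sketch in plain Python. The layer sizes, weights, and biases are hypothetical values picked by hand for illustration rather than learned, and ReLU is assumed as the hidden-layer activation.

```python
def relu(z):
    """Hidden-layer activation (assumed): passes positive sums, zeroes negative ones."""
    return max(0.0, z)


def identity(z):
    """Output-layer activation (assumed): returns the weighted sum unchanged."""
    return z


def layer_forward(inputs, weights, biases, activation):
    """Each node sums its weighted inputs, adds a bias, and applies the activation."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


def network_forward(inputs, layers):
    """Pass the signal through each layer in turn; depth = number of layers."""
    signal = inputs
    for weights, biases, activation in layers:
        signal = layer_forward(signal, weights, biases, activation)
    return signal


# Hypothetical 2-input -> 2-hidden -> 1-output network with hand-picked weights.
LAYERS = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),  # hidden layer
    ([[2.0, 1.0]], [0.0], identity),                  # output layer
]

if __name__ == "__main__":
    print(network_forward([3.0, 1.0], LAYERS))
```

With input `[3.0, 1.0]` both hidden units output 2.0, and because the first output weight (2.0) is twice the second, the first hidden unit contributes twice as much to the final output of 6.0.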

Deep learning systems need large quantities of data to produce accurate results, so they are trained on huge datasets. While processing data, an artificial neural network classifies it based on the answers to a series of binary true/false questions that involve highly complex mathematical calculations. A facial recognition program, for example, first learns to detect the edges and lines of faces, then larger features of faces, and finally complete representations of faces. As the program keeps training itself, its accuracy improves, and over time it learns to identify people correctly. Training takes longer than with conventional machine learning.

Deep learning methods (Types of Algorithms used in Deep Learning)

Deep learning models can be highly accurate and can tackle problems that are too complex to solve with hand-written rules. The field draws on a wide range of model architectures and training techniques, including:

- Convolutional Neural Networks

- Recurrent Neural Networks

- Generative Adversarial Networks

- Self-Organizing Maps

- Restricted Boltzmann Machines

- Deep Reinforcement Learning

- Autoencoders

- Backpropagation

- Gradient Descent

- Long Short-Term Memory Networks

- Radial Basis Function Networks

- Multilayer Perceptrons

- Deep Belief Networks

Convolutional Neural Networks

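A convolutional neural network (CNN) is a specialized variant of the traditional artificial neural network, inspired by the arrangement of neurons in the animal visual cortex. Instead of connecting every input to every neuron, a convolutional layer slides small learned filters (kernels) across the input, so the same pattern detector, for example one for an edge, is applied at every position. This makes CNNs well suited to complex, grid-like data such as images: they need far fewer weights than a fully connected network would, and they reduce the need for manual preprocessing.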
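The core operation of a convolutional layer can be sketched in a few lines of Python. This is a minimal sketch assuming a single-channel image, stride 1, and no padding; the image and kernel values are hypothetical, and a real CNN would learn its kernel values during training instead of using a hand-picked edge detector.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNN layers (strictly, cross-correlation:
    the kernel is not flipped). The output is smaller than the input by
    kernel size - 1 in each dimension."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Slide the kernel over the image and sum the element-wise products.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Hypothetical 4x4 grayscale image: dark on the left, bright on the right.
IMAGE = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked vertical-edge kernel; a real CNN learns its kernel values.
KERNEL = [
    [-1, 1],
    [-1, 1],
]

if __name__ == "__main__":
    for row in conv2d(IMAGE, KERNEL):
        print(row)
```

Every output row is `[0.0, 2.0, 0.0]`: the filter responds only where dark meets bright, and it does so at every vertical position using the same four weights.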

Long Short-Term Memory Networks

Long short-term memory networks (LSTMs) are a type of recurrent neural network (RNN) that can learn and remember long-term dependencies. Their default behavior is to retain information over long periods, which makes them useful for time-series prediction, since they remember previous inputs. LSTMs have a chain-like structure in which each repeating module contains four interacting layers, unlike the single layer found in a standard RNN module. LSTMs are commonly used for speech recognition, music composition, and pharmaceutical research, among other things.
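The "four interacting layers" are usually described as three sigmoid gates (forget, input, output) plus a tanh layer that proposes new information. The sketch below follows that standard formulation for a single scalar unit; the parameter values and the helper `final_cell_state` are hypothetical, chosen so that the cell either holds on to its memory or discards it.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit LSTM cell (standard formulation).
    params maps each of the four layers to a tuple (w_x, w_h, b)."""
    def pre(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))       # how much old cell state to keep
    i = sigmoid(pre("input"))        # how much new information to write
    g = math.tanh(pre("candidate"))  # candidate information to write
    o = sigmoid(pre("output"))       # how much of the cell state to expose
    c = f * c_prev + i * g           # updated cell state (long-term memory)
    h = o * math.tanh(c)             # new hidden state (the cell's output)
    return h, c


# Hypothetical parameters: all weights are zero, so only the biases matter.
REMEMBER = {"forget": (0.0, 0.0, 10.0), "input": (0.0, 0.0, -10.0),
            "candidate": (0.0, 0.0, 0.0), "output": (0.0, 0.0, 0.0)}
FORGET = {"forget": (0.0, 0.0, -10.0), "input": (0.0, 0.0, -10.0),
          "candidate": (0.0, 0.0, 0.0), "output": (0.0, 0.0, 0.0)}


def final_cell_state(params, steps, c0=1.0):
    """Hypothetical helper: feed a constant input and return the final cell state."""
    h, c = 0.0, c0
    for _ in range(steps):
        h, c = lstm_step(0.5, h, c, params)
    return c
```

With the forget gate held open, the cell state stays close to its starting value after 100 steps; with the forget gate shut, it is wiped out in a single step. In a trained LSTM, these gate values are computed from the input and hidden state rather than fixed.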

Recurrent Neural Networks

Recurrent neural networks (RNNs) have connections that form directed cycles, so the output produced at one step is fed back as an input at the next step. Thanks to this internal memory (the hidden state), an RNN can take earlier inputs into account when processing the current one. RNNs are used for image captioning, time-series analysis, natural language processing, handwriting recognition, and machine translation.
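A minimal sketch of the feedback loop, assuming a single hidden unit with a tanh activation (a simple Elman-style RNN). The weight values passed in are hypothetical; the point is that the recurrent weight `w_h` is what lets an early input still affect later outputs.

```python
import math


def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Single-unit recurrent network. At every step the previous hidden state
    is fed back in: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h = h0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


if __name__ == "__main__":
    # The input is 1.0 only at the first step; watch it echo through later steps.
    print(rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0))
```

With `w_h=0.5` the final state is still positive even though the last two inputs are zero; setting `w_h=0.0` removes the memory, and the final state becomes exactly 0.0.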

Generative Adversarial Networks

GANs are generative deep learning algorithms that create new data instances resembling their training data. A GAN has two components: a generator, which learns to produce fake data, and a discriminator, which learns to tell the fake data apart from real data. The two are trained against each other until the generator's output is hard to distinguish from the real thing. The use of GANs has grown over time. They have been used to improve astronomical images and to simulate gravitational lensing for dark-matter research. Video game developers use GANs to upscale low-resolution 2D textures in older games, recreating them at 4K or higher resolutions through image training.
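The tug-of-war between the two networks is usually expressed through two loss functions. The sketch below assumes the common binary cross-entropy formulation with the "non-saturating" generator loss; the probability lists stand in for outputs that a real discriminator network would produce.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator. d_real / d_fake are the
    discriminator's probabilities that each real / generated sample is real."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator is fooled
    into scoring generated samples as real (close to 1)."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)
```

When the discriminator confidently spots the fakes (scores near 0), the generator's loss is high; when it is fooled (scores near 1), the discriminator's loss is high. If the discriminator can do no better than guessing 0.5 for everything, its loss is 2 ln 2, which is the theoretical equilibrium of this objective.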

Self-Organizing Maps

Self-organizing maps (SOMs) work with unlabeled data and reduce the number of random variables in a model. The output is a fixed two-dimensional grid of nodes, and every node is connected to the input through its own weight vector. For each data point, the nodes compete to represent it: the node whose weights are closest to the input becomes the Best Matching Unit (BMU). The SOM then updates the weights of the BMU and its neighbors, and how much a node's weights change depends on how close it is to the BMU on the grid. Because the weights live in the same space as the input, a node's weight vector serves both as a characteristic of the node itself and as its location in that space.
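One SOM training step can be sketched as follows: find the BMU, then pull every node toward the input by an amount that shrinks with its grid distance from the BMU. This is a minimal sketch assuming Euclidean distance, a Gaussian neighborhood, and a fixed learning rate and radius (real SOMs usually shrink both over time); the 2x2 grid is hypothetical.

```python
import math


def best_matching_unit(grid, x):
    """Return the (row, col) of the node whose weight vector is closest to x."""
    best, best_dist = None, math.inf
    for r, row in enumerate(grid):
        for c, w in enumerate(row):
            d = sum((wi - xi) ** 2 for wi, xi in zip(w, x))
            if d < best_dist:
                best, best_dist = (r, c), d
    return best


def som_update(grid, x, lr=0.5, radius=1.0):
    """Pull the BMU and its neighbors toward x; the pull weakens with
    distance from the BMU on the 2D grid (Gaussian neighborhood)."""
    br, bc = best_matching_unit(grid, x)
    for r, row in enumerate(grid):
        for c, w in enumerate(row):
            grid_dist_sq = (r - br) ** 2 + (c - bc) ** 2
            influence = math.exp(-grid_dist_sq / (2 * radius ** 2))
            row[c] = [wi + lr * influence * (xi - wi) for wi, xi in zip(w, x)]
    return br, bc


# Hypothetical 2x2 map whose nodes hold 2D weight vectors.
GRID = [
    [[0.0, 0.0], [0.0, 1.0]],
    [[1.0, 0.0], [1.0, 1.0]],
]
```

For the input `[0.9, 0.9]`, node (1, 1) wins and moves halfway toward the input, while the far corner (0, 0) moves only a fraction of the way, which is how neighboring nodes come to represent similar data.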

Restricted Boltzmann Machines

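A restricted Boltzmann machine (RBM) is a stochastic neural network that learns a probability distribution over its inputs. In a general Boltzmann machine, every node can connect to every other node and the connections have no predetermined direction. An RBM keeps the undirected connections but restricts the structure to two layers, a visible (input) layer and a hidden layer: every visible node connects to every hidden node, and nodes within the same layer are not connected. This restriction makes the model much easier to train. RBMs are used for tasks such as dimensionality reduction, feature learning, and collaborative filtering.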
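The restriction shows up directly in the math. The sketch below assumes the standard formulation for binary units: an energy function with only visible-to-hidden weights, and hidden-unit probabilities that can each be computed independently given the visible layer. The weight and bias values used with it are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def energy(v, h, W, a, b):
    """Energy of a joint configuration of binary visible units v and hidden
    units h; lower energy means a more probable configuration.
    W[i][j] connects visible unit i to hidden unit j. There are no
    visible-visible or hidden-hidden weights (the 'restriction')."""
    e = -sum(ai * vi for ai, vi in zip(a, v))
    e -= sum(bj * hj for bj, hj in zip(b, h))
    e -= sum(v[i] * W[i][j] * h[j] for i in range(len(v)) for j in range(len(h)))
    return e


def hidden_probs(v, W, b):
    """p(h_j = 1 | v). Because hidden units are not connected to each other,
    each one depends only on the visible layer."""
    return [sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b))]
```

With hypothetical weights `W = [[2.0, -2.0], [2.0, -2.0]]` and zero biases, the visible pattern `[1, 1]` gives the configuration with the first hidden unit on an energy of -4.0 and the opposite configuration +4.0, so `hidden_probs` turns the first hidden unit on with high probability.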

Deep Reinforcement Learning

The fundamental idea of reinforcement learning is that an agent interacts with an environment and changes its state through its actions. The agent observes the state, acts, and receives a reward, and by interacting with the environment it learns which actions help it reach its goal. In deep reinforcement learning, a neural network drives these decisions: its input layer receives the current state of the environment, its hidden layers process that state, and its output layer estimates the expected future reward of each action available in that state. The model keeps updating these estimates to predict each action's potential future reward.
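The "expected future reward of each action" is what Q-learning estimates. The sketch below deliberately swaps the neural network for a plain lookup table (tabular Q-learning) so the update rule is easy to see; a deep RL agent would replace the table with a network that outputs one value per action. The three-state corridor, its rewards, and the `alpha`/`gamma` values are hypothetical.

```python
def q_learning_update(q, state, action, reward, next_state, alpha=0.5, gamma=0.9):
    """One Q-learning step: move q[state][action] toward
    reward + gamma * (best estimated value of the next state)."""
    best_next = max(q[next_state].values()) if q[next_state] else 0.0
    target = reward + gamma * best_next
    q[state][action] += alpha * (target - q[state][action])
    return q[state][action]


def run_demo(episodes=200):
    """Hypothetical corridor: start -> middle -> goal, with reward 1 at the goal."""
    q = {
        "start": {"left": 0.0, "right": 0.0},
        "middle": {"left": 0.0, "right": 0.0},
        "goal": {},  # terminal state: no actions, no future reward
    }
    for _ in range(episodes):
        q_learning_update(q, "start", "right", 0.0, "middle")
        q_learning_update(q, "middle", "right", 1.0, "goal")
    return q
```

After training, moving right from the middle is valued at 1.0 and moving right from the start at 0.9: the start state receives no immediate reward, but the agent learns the discounted future reward of that action.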

Autoencoders

Autoencoders are a type of feedforward neural network trained so that the output reproduces the input: an encoder compresses the input into a smaller internal code, and a decoder reconstructs the input from that code. Geoffrey Hinton developed autoencoders in the 1980s to tackle unsupervised learning problems. They train themselves iteratively to copy data from the input layer to the output layer. Autoencoders are used for various purposes, including drug discovery, popularity prediction, and image processing.
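A minimal sketch of an autoencoder teaching itself by iteration: a linear encoder squeezes each 2D point into a single number, and a linear decoder expands it back. Everything here is an assumption for illustration: no activation functions, hypothetical starting weights, and hypothetical data that lies on the line x2 = 2 * x1, so one number is enough to describe each point and the reconstruction can become nearly perfect.

```python
# Hypothetical data: 2D points that all lie on the line x2 = 2 * x1.
DATA = [(-1.0, -2.0), (-0.5, -1.0), (0.5, 1.0), (1.0, 2.0)]


def encode(a, x):
    """Encoder: compress a 2D input into a 1D code."""
    return a[0] * x[0] + a[1] * x[1]


def decode(b, z):
    """Decoder: expand the 1D code back into a 2D reconstruction."""
    return [b[0] * z, b[1] * z]


def train(data, epochs=500, lr=0.05):
    """Gradient descent on the mean squared reconstruction error."""
    a = [0.3, 0.1]  # encoder weights (hypothetical starting values)
    b = [0.2, 0.4]  # decoder weights (hypothetical starting values)
    n = len(data)
    losses = []
    for _ in range(epochs):
        ga, gb, loss = [0.0, 0.0], [0.0, 0.0], 0.0
        for x in data:
            z = encode(a, x)
            err = [xh - xi for xh, xi in zip(decode(b, z), x)]
            loss += (err[0] ** 2 + err[1] ** 2) / n
            dz = 2 * (err[0] * b[0] + err[1] * b[1])  # chain rule back through the decoder
            for k in range(2):
                gb[k] += 2 * err[k] * z / n
                ga[k] += dz * x[k] / n
        a = [a[k] - lr * ga[k] for k in range(2)]
        b = [b[k] - lr * gb[k] for k in range(2)]
        losses.append(loss)  # loss measured before this epoch's update
    return a, b, losses
```

After training, a new point on the same line, such as `(0.25, 0.5)`, passes through the one-number bottleneck and comes back out essentially unchanged. Real autoencoders add nonlinear layers so they can compress data that does not lie on a straight line.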

Backpropagation

Backpropagation (backprop) is the core mechanism by which neural networks in deep learning learn from errors in their predictions. Propagation refers to transmitting data along a path in a particular direction. In the forward pass, input signals propagate through the network to produce a prediction. Backpropagation then sends the prediction error backward through the network, using the chain rule to work out how much each weight contributed to the error, so that every weight can be adjusted (typically with gradient descent) to reduce it.
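The forward-then-backward flow can be sketched on the smallest possible network: one tanh hidden unit feeding one linear output, trained on a squared error. The network shape and the numbers used with it are hypothetical. As a sanity check, the gradients from backpropagation are compared against a finite-difference estimate.

```python
import math


def forward(w1, w2, x):
    h = math.tanh(w1 * x)  # hidden unit
    y = w2 * h             # output unit (linear)
    return h, y


def loss(w1, w2, x, target):
    _, y = forward(w1, w2, x)
    return (y - target) ** 2


def backprop(w1, w2, x, target):
    """Forward pass, then propagate the error backward with the chain rule."""
    h, y = forward(w1, w2, x)
    dL_dy = 2 * (y - target)           # derivative of the squared error
    dL_dw2 = dL_dy * h                 # because y = w2 * h
    dL_dh = dL_dy * w2                 # error signal sent back to the hidden unit
    dL_dw1 = dL_dh * (1 - h ** 2) * x  # tanh'(u) = 1 - tanh(u)**2, with u = w1 * x
    return dL_dw1, dL_dw2


def numerical_grad(w1, w2, x, target, eps=1e-6):
    """Central-difference estimate, used only to check backprop."""
    g1 = (loss(w1 + eps, w2, x, target) - loss(w1 - eps, w2, x, target)) / (2 * eps)
    g2 = (loss(w1, w2 + eps, x, target) - loss(w1, w2 - eps, x, target)) / (2 * eps)
    return g1, g2
```

Both methods agree to within about a millionth, and taking a small step against the backpropagated gradient lowers the loss, which is exactly how training uses these numbers.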

Radial Basis Function Networks

Feedforward neural networks with radial basis functions as activation functions are known as RBFNs. They are typically used for classification, regression, and time-series prediction. They feature an input layer, a hidden layer, and an output layer.
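A minimal sketch of the three-layer RBFN structure, assuming Gaussian radial basis functions with a single shared width and a linear output layer. The centers, width, and output weights are hypothetical values; in practice the centers are often chosen by clustering the training data and the output weights are fitted afterwards.

```python
import math


def gaussian_rbf(x, center, width):
    """Radial basis function: depends only on the distance between x and center."""
    dist_sq = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-dist_sq / (2 * width ** 2))


def rbfn_predict(x, centers, width, out_weights, bias=0.0):
    """Input layer -> hidden layer of Gaussian RBF units -> linear output layer."""
    hidden = [gaussian_rbf(x, c, width) for c in centers]
    return sum(w * h for w, h in zip(out_weights, hidden)) + bias
```

With hypothetical centers at `[0.0, 0.0]` and `[1.0, 1.0]` and output weights `[-1.0, 1.0]`, inputs near the second center score close to +1 and inputs near the first score close to -1, so the sign of the output works as a simple classifier.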

Multilayer Perceptrons

A multilayer perceptron (MLP) is a feedforward neural network made up of multiple layers of perceptrons with activation functions. An MLP has an input layer and an output layer with one or more hidden layers between them, and each layer is fully connected to the next. The input and output layers can differ in size, and the number of hidden layers and neurons varies with the task. MLPs can be used to build speech recognition, image recognition, and machine translation software.
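A classic illustration of why the hidden layer matters is XOR, which no single perceptron can compute. The sketch below uses hand-picked weights (not learned) and the classic step activation; modern MLPs use differentiable activations such as ReLU so the weights can be trained with backpropagation.

```python
def step(z):
    """Classic perceptron activation: fire (1) if the weighted sum is positive."""
    return 1 if z > 0 else 0


def dense(inputs, weights, biases):
    """Fully connected layer: every input feeds every neuron in the layer."""
    return [step(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


# Hand-picked weights (not learned): hidden neuron 1 acts like OR, neuron 2 like AND.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [-0.5, -1.5]
OUTPUT_W = [[1.0, -1.0]]  # "OR and not AND" gives XOR
OUTPUT_B = [-0.5]


def mlp_xor(x1, x2):
    hidden = dense([x1, x2], HIDDEN_W, HIDDEN_B)
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]


if __name__ == "__main__":
    for pair in [(0, 0), (0, 1), (1, 0), (1, 1)]:
        print(pair, mlp_xor(*pair))
```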

Deep Belief Networks

Deep belief networks (DBNs) are generative models made up of multiple layers of stochastic latent variables. The latent variables, often called hidden units, have binary values. A DBN can be built by stacking restricted Boltzmann machines and training them one layer at a time.

Where is deep learning used?

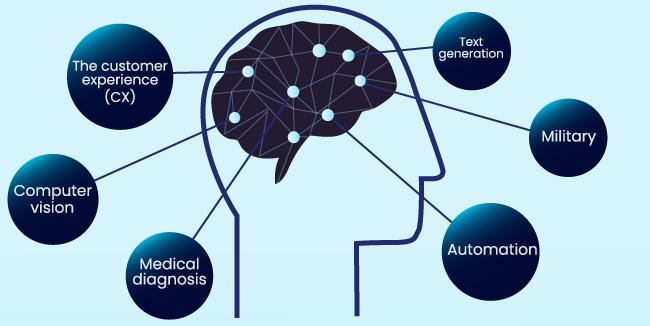

Deep learning is loosely inspired by the structure of the human brain, so it can be applied to many tasks that people perform. Deep learning now powers most current image recognition, natural language processing (NLP), and speech recognition software. These technologies are increasingly used in a range of applications, including self-driving cars and language translation services.

Deep learning is being employed in a variety of fields, including the following:

- The customer experience (CX). Deep learning techniques are already used in chatbots. As the technology matures, more organizations are expected to use it to improve CX and customer satisfaction.

- Text generation. Models learn the grammar and style of a body of text and use what they have learned to generate completely new text that matches the original's spelling, grammar, and style.

- Military. For example, deep learning is used to detect objects in satellite imagery, identifying areas of interest as well as safe or hazardous zones for troops.

- Automation. Deep learning enhances worker safety in factories and warehouses by delivering services that automatically detect when a person or object is getting too close to a machine.

- Medical diagnosis. Cancer researchers have begun using deep learning to examine tissue samples for signs of cancer.

- Computer vision. Deep learning has transformed computer vision, enabling computers to detect and classify objects with remarkable precision and to restore and segment images.

Limitations and challenges

- Deep learning models learn through training, which means they know only what is in the data they were trained on. If the training data is small, or comes from a single source that does not represent the broader problem area, the model will not generalize well.

- Bias is another major challenge and has been a thorny issue for deep learning developers. If a model is trained on biased data, it will reproduce those biases in its predictions, because deep learning algorithms learn to discriminate based on subtle variations in the data.

- The learning rate can also be a difficult problem. If the rate is too high, the model may converge too quickly to a suboptimal solution or fail to converge at all; if it is too low, training can get stuck or take much longer.

- Hardware requirements for deep learning models might also create barriers. To improve efficiency and reduce time consumption, multicore high-performance graphics processing units (GPUs) and similar computing devices are required. On the other hand, these components are expensive and consume significant energy.

- Deep learning requires large quantities of data. More sophisticated and accurate models have more parameters, which in turn require even more data.

- Once trained, deep learning models are rigid and cannot multitask. They deliver efficient and accurate solutions only to the specific problem they were trained on; even tackling a similar problem requires retraining the system.

- Even with large amounts of data, current deep learning algorithms cannot perform long-term planning or algorithm-like data manipulation, which puts applications that require reasoning, such as programming or applying the scientific method, beyond their reach.
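The learning-rate trade-off from the list above can be seen with plain gradient descent on the toy function f(x) = x², whose minimum is at x = 0. This is a minimal sketch on a hypothetical one-dimensional problem, not a real network: each step multiplies x by (1 - 2 * lr), so a moderate rate converges, a tiny rate barely moves, and an overly large rate overshoots the minimum further on every step and diverges.

```python
def gradient_descent(grad, x0, lr, steps):
    """Plain gradient descent: repeatedly step against the gradient."""
    x = x0
    for _ in range(steps):
        x = x - lr * grad(x)
    return x


def grad_f(x):
    # f(x) = x**2 has its minimum at x = 0; its derivative is 2x.
    return 2 * x


if __name__ == "__main__":
    for lr in (0.1, 0.001, 1.1):
        print(lr, gradient_descent(grad_f, 1.0, lr, steps=50))
```

On the loss surfaces of real networks the effects are less clean, but the same tension applies, which is why the learning rate is usually tuned or scheduled during training.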

The Position of Deep Learning in AI

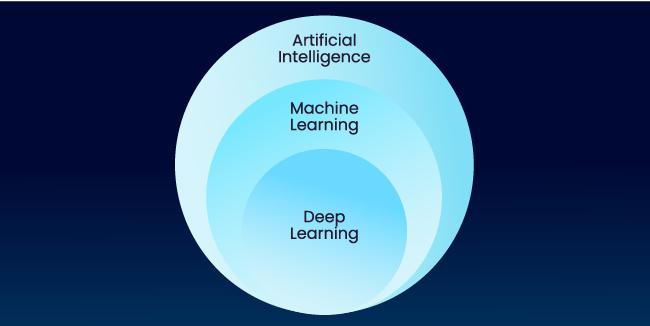

Artificial intelligence (AI), machine learning (ML), and deep learning (DL) are three increasingly common words that are often used interchangeably to describe systems or software that exhibit intelligent behavior.

Deep learning is a type of machine learning and part of the broader field of artificial intelligence. In general, AI aims to build human-like behavior and cognition into machines or systems, whereas ML is the technique of learning from data or experience to automate analytical model building. In deep learning, "deep" refers to building data-driven models through many levels, or stages, of data processing. The term also refers to learning methods that use multi-layer neural networks to process data.

What's the Difference Between Machine Learning and Deep Learning?

Deep learning is a type of machine learning. In a machine learning workflow for image classification, relevant features are first extracted manually from the images, and these features are then used to build a model that classifies the objects in the image. With deep learning, relevant features are discovered from the images automatically. Deep learning also performs "end-to-end learning": a network is given raw data and a task to perform, such as classification, and learns how to accomplish it automatically.

Another key difference is that deep learning algorithms keep improving as the amount of data grows, whereas shallow learning methods level off. Shallow learning refers to machine learning methods whose performance plateaus at some point as you add more examples and training data.

In machine learning, you manually pick features and a classifier to categorize images. Feature extraction and modeling are done automatically with deep learning. Another benefit of deep learning networks is that they typically improve with the data you feed them.

Learning Time

- Deep Learning: Long learning time

- Machine Learning: Short learning time

Data

- Deep Learning: Requires large datasets

- Machine Learning: Does not require large datasets; can learn from smaller collections

Hardware requirements

- Deep Learning: Can train on a CPU, but a GPU is recommended

- Machine Learning: Calculations can usually be performed on a CPU

Conclusion

The growth of deep-learning models is expected to accelerate and create even more innovative applications in the next few years.